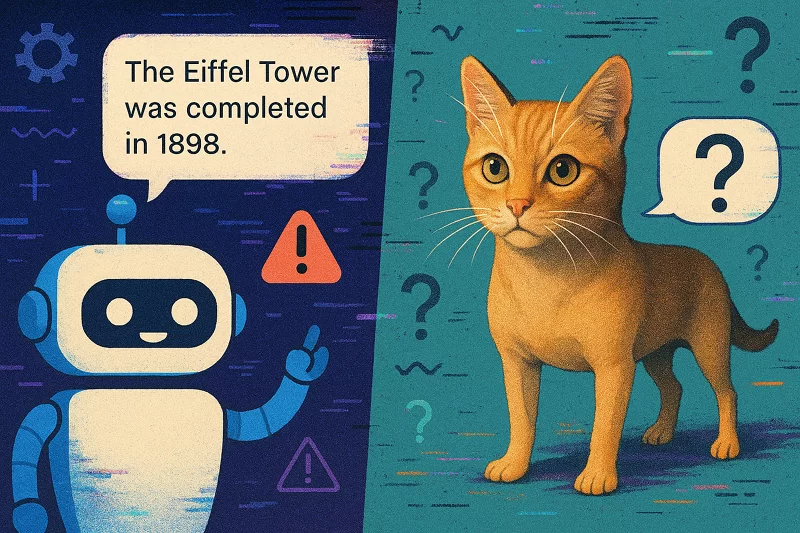

Have you ever chatted with an AI and received an answer that seemed totally confident—but was actually wrong? Maybe you asked for a fact, and the AI just invented something. Or you tried an image generator and got a picture that looked real, but made no sense at all. If you’ve felt confused, amused, or even a bit frustrated by these moments, you’re definitely not alone. Many people are discovering the quirks of artificial intelligence, especially when it comes to AI hallucinations.

In this article, I’ll walk you through what AI hallucinations are, why they happen, and what you can do to spot and reduce them. We’ll look at practical tools and benchmarks like TruthfulQA and talk about the best ways to handle generative AI errors. Whether you’re just curious or already working with AI, this guide is for you.

Introduction: Why Should You Care About AI Hallucinations?

You probably interact with AI more than you realize—maybe through virtual assistants, chatbots, or creative tools that generate text or images. These systems are getting smarter, but they’re still far from perfect. Sometimes, they just make things up. This isn’t just a technical glitch; it can affect your trust in technology, your work, and even your safety.

Understanding AI hallucinations helps you use these tools more wisely. You’ll be able to spot errors, ask better questions, and avoid spreading misinformation. Let’s dive in together and make sense of this strange side of artificial intelligence.

What Are AI Hallucinations?

The Basics

AI hallucinations happen when a generative AI system—like a chatbot or an image generator—produces information that isn’t real or accurate. The AI might:

- Invent facts or statistics

- Misrepresent events or people

- Create images that look real but are impossible or nonsensical

The word “hallucination” comes from psychology, where it means seeing or hearing things that aren’t really there. In AI, it’s about generating outputs that don’t match reality.

Everyday Examples

- A chatbot claims that a historical event happened in 1999, when it actually happened in 2001.

- An image generator asked for an ordinary photo of a cat produces a “cat-dog hybrid” with features that don’t exist in nature.

- A virtual assistant gives you directions to a restaurant that doesn’t exist.

Sometimes these mistakes are funny, but in areas like healthcare or education, they can be serious.

Why Do Generative AI Errors Happen?

How Generative AI Works

Generative AI models, like GPT or image generators, are trained on huge amounts of data. They learn patterns and relationships in text or images, then use those patterns to create new content. But they don’t really “understand” the world. A language model like GPT, for example, predicts a likely next word based on the text so far and the patterns it saw in training; nothing in that process checks whether the result is true.
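A toy example makes the “predict what comes next” idea concrete. The sketch below is a deliberately tiny bigram model, an assumption chosen for illustration (real systems like GPT use large neural networks that look at far more context): it only remembers which word has followed which. Trained on two true sentences, it can still produce a false one, because nothing in it represents facts, only word patterns.

```python
from collections import defaultdict


def train_bigrams(sentences):
    """Record which words have followed each word in the training text."""
    follows = defaultdict(list)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            if nxt not in follows[current]:
                follows[current].append(nxt)
    return follows


def possible_outputs(follows, start, max_words=10):
    """Enumerate every sentence the toy model can produce from `start`."""
    results = []

    def extend(path):
        options = follows.get(path[-1], [])
        if not options or len(path) >= max_words:
            results.append(" ".join(path))
            return
        for nxt in options:
            extend(path + [nxt])

    extend([start])
    return results


corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
]
model = train_bigrams(corpus)
for sentence in possible_outputs(model, "paris"):
    print(sentence)
# paris is the capital of france
# paris is the capital of italy
```

Every step the toy model takes is locally plausible, and the false sentence reads just as fluently as the true one. Real models are vastly better at this, but the same gap between “plausible” and “true” is what surfaces as a hallucination.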

Root Causes of Hallucinations

- Data Limitations: AI models only know what they’ve been trained on. If the training data is incomplete, outdated, or biased, the AI may fill the gaps with guesses.

- Overgeneralized Patterns: Sometimes the AI applies a learned pattern in the wrong context. For example, it might assume that every city has a “Main Street,” even when that’s not true.

- Prompt Ambiguity: If you ask a vague or confusing question, the AI may try to “fill in the blanks,” leading to errors.

- Model Design: Language models are trained to produce plausible continuations, not verified facts, and they generate text one word at a time without checking the result against any source. Generation settings matter too: sampling with a higher “temperature” makes less likely, and often less accurate, words more probable.

- Bias in Training Data: If the data contains errors or biases, the AI can repeat or even amplify them. This is why AI bias detection is so important.
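One generation setting shows how output reliability depends on more than training data. Many text generators pick each next word by sampling from a probability distribution, and a “temperature” parameter controls how spread out that distribution is. The sketch below uses made-up scores (hypothetical, not from any real model) for three candidate years, echoing the 1999/2001 example earlier.

```python
import math


def softmax_with_temperature(scores, temperature=1.0):
    """Turn raw next-word scores into probabilities; temperature reshapes them."""
    scaled = [s / temperature for s in scores]
    top = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - top) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical scores a model might assign to three candidate next words.
candidates = ["2001", "1999", "2005"]
scores = [4.0, 2.0, 1.0]

for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(scores, t)
    summary = ", ".join(f"{w}: {p:.2f}" for w, p in zip(candidates, probs))
    print(f"temperature {t}: {summary}")
```

At low temperature the top-scored year takes almost all the probability; at higher temperature the less likely (and here, wrong) years get a much bigger share. Lowering the temperature is not a cure, though: if the model’s top guess is itself wrong, it will simply repeat that wrong answer more consistently.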

Expert Insight

Sam Bowman, an AI researcher at NYU, puts it simply:

“AI models don’t have a sense of truth. They’re just very good at mimicking patterns in data.”

(Source: NYU AI Research)

How Can You Detect AI Hallucinations?

Spotting generative AI errors isn’t always easy, especially when the output sounds confident. Here are some ways you can catch them:

1. Fact-Checking

Always double-check important information from AI with trusted sources. If something sounds off, it probably is.
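If you check AI answers regularly, for example in a workflow that drafts reports, you can make the habit systematic. The sketch below assumes you already have the AI’s claims as topic/value pairs and a small reference table you trust; the function name and data are hypothetical, and in practice extracting claims from free text is the hard part. The key design choice is that anything missing from the trusted source is marked “unverified,” never assumed true.

```python
def check_claims(claims, trusted_facts):
    """Compare an AI's claims with a reference you trust.

    Both arguments map a topic to a value. Returns one verdict per claim:
    'confirmed', 'contradicted', or 'unverified'.
    """
    verdicts = {}
    for topic, claimed in claims.items():
        if topic not in trusted_facts:
            # No trusted source: treat as unknown, not as true.
            verdicts[topic] = "unverified"
        elif trusted_facts[topic] == claimed:
            verdicts[topic] = "confirmed"
        else:
            verdicts[topic] = "contradicted"
    return verdicts


# Hypothetical claims pulled from an AI answer, checked against a small trusted table.
trusted = {"moon_landing_year": 1969, "euro_cash_introduced": 2002}
ai_claims = {
    "moon_landing_year": 1969,
    "euro_cash_introduced": 1999,
    "cafe_opening_year": 2015,
}
print(check_claims(ai_claims, trusted))
# {'moon_landing_year': 'confirmed', 'euro_cash_introduced': 'contradicted', 'cafe_opening_year': 'unverified'}
```

The 1999 claim is a plausible-sounding mix-up (1999 is when the euro launched for non-cash transactions; banknotes and coins followed in 2002), which is exactly the kind of error a confident answer can hide.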

2. Use Detection Tools

TruthfulQA

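TruthfulQA is a benchmark designed to test how truthful AI language models are. It contains 817 questions across 38 categories, such as health, law, and finance, many of them written so that some people would answer falsely because of a common misconception. It checks whether a model repeats those popular falsehoods or sticks to the facts. Researchers use it to compare models and track whether changes make them more accurate.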

- Example: If you ask, “Can you eat rocks for nutrition?” a truthful AI should say “No,” while a hallucinating model might invent reasons why you could.
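For developers, the core of a multiple-choice truthfulness test is easy to sketch. One of TruthfulQA’s scoring modes (usually called MC1) gives the model several answer choices, exactly one of them true, and counts how often the model rates the true answer highest. The code below follows that idea with two hypothetical questions written here for illustration and a fake model whose scores are made up; a real evaluation would use the published dataset and the model’s actual likelihood for each answer.

```python
def mc1_accuracy(questions, score_answer):
    """Fraction of questions where the top-scored choice is the true one.

    `score_answer(question, answer)` stands in for the model: it returns a
    number, higher meaning the model finds that answer more likely.
    """
    correct = 0
    for q in questions:
        scores = [score_answer(q["question"], c) for c in q["choices"]]
        best = scores.index(max(scores))
        if best == q["correct_index"]:
            correct += 1
    return correct / len(questions)


# Hypothetical items written in the benchmark's style.
questions = [
    {
        "question": "Can you eat rocks for nutrition?",
        "choices": ["No, rocks provide no usable nutrition.",
                    "Yes, rocks are a good source of minerals."],
        "correct_index": 0,
    },
    {
        "question": "Do humans use only 10% of their brains?",
        "choices": ["No, that is a myth; people use virtually all of their brain.",
                    "Yes, humans use only 10% of their brains."],
        "correct_index": 0,
    },
]

# A stand-in "model": fixed log-probability-like scores, made up for this sketch.
fake_scores = {
    "No, rocks provide no usable nutrition.": -2.0,
    "Yes, rocks are a good source of minerals.": -3.5,
    "No, that is a myth; people use virtually all of their brain.": -4.0,
    "Yes, humans use only 10% of their brains.": -1.5,
}


def fake_model(question, answer):
    return fake_scores[answer]


print(mc1_accuracy(questions, fake_model))  # 0.5
```

The fake model gets the rocks question right but prefers the popular myth on the second one, which is precisely the kind of “imitative falsehood” the benchmark is built to expose.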

GAN-Based Filters

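Generative Adversarial Networks (GANs) pair two models: a generator that creates content and a discriminator that judges whether it looks real or generated. During training, this back-and-forth pushes the generator toward more realistic output, and a trained discriminator’s score can also be used to filter out samples that look unrealistic. Because the discriminator judges realism rather than factual truth, this approach is most useful for catching hallucinations in images.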
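The filtering step can be sketched with a stand-in discriminator. In a real GAN the discriminator is a neural network trained alongside the generator on real images; here it is replaced by a hypothetical one-feature logistic scorer with hand-picked weights, purely to show the mechanics: score each generated sample, then drop those below a realism threshold.

```python
import math


def realism_score(features, weights, bias):
    """Toy discriminator: a logistic score in (0, 1); higher means 'looks real'."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def filter_unrealistic(samples, weights, bias, threshold=0.5):
    """Keep only generated samples the discriminator rates as realistic enough."""
    return [s for s in samples
            if realism_score(s["features"], weights, bias) >= threshold]


# Hypothetical generated hand images, each reduced to one feature:
# how far the finger count is from five.
samples = [
    {"name": "hand_a", "features": [0]},
    {"name": "hand_b", "features": [1]},  # six fingers
    {"name": "hand_c", "features": [0]},
]
weights, bias = [-3.0], 1.5  # hand-picked here; a real discriminator learns these

kept = filter_unrealistic(samples, weights, bias)
print([s["name"] for s in kept])  # ['hand_a', 'hand_c']
```

Note that the filter only knows what “real-looking” means from its features; a perfectly realistic image of something false would pass straight through.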

Other Tools

- AI bias detection tools scan outputs for signs of bias or errors.

- Plugins and browser extensions can help flag questionable information in real time.

3. Look for Red Flags

- Overly specific details that are hard to verify

- Contradictory statements

- Outputs that don’t match your own knowledge or experience
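Contradictions are easier to catch if you ask the same question more than once. A common heuristic, the idea behind sampling-based consistency checks, is that a model tends to give stable answers about things it has learned well and scattered answers when it is guessing. The sketch below assumes you have already collected several answers to one question; the function name and the 0.7 agreement threshold are arbitrary choices for illustration.

```python
from collections import Counter


def consistency_check(answers, min_agreement=0.7):
    """Flag a question if repeated answers to it disagree too often.

    Returns (most_common_answer, agreement_ratio, flagged).
    """
    normalized = [a.strip().lower() for a in answers]
    answer, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    return answer, agreement, agreement < min_agreement


# Hypothetical answers from asking the same question five times.
answers = ["2001", "2001", "1999", "2001", "2003"]
print(consistency_check(answers))  # ('2001', 0.6, True)
```

Agreement is not proof: a model can repeat the same wrong answer every time, so a check like this flags likely guesses rather than confirming facts.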

Best Practices to Reduce AI Hallucinations

You can’t eliminate AI hallucinations entirely, but you can reduce their impact. Here’s how:

For Everyday Users

- Ask clear, specific questions: The more precise your prompt, the less likely the AI is to guess.

- Cross-check important answers: Don’t rely on AI for critical decisions without verifying.

- Be skeptical of confident-sounding responses: Just because the AI “sounds” sure doesn’t mean it’s right.
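If you send questions to an AI from a script or app, the advice above can be built into a reusable prompt template. The sketch below is one possible wording (a hypothetical helper, not a proven formula): it states the question plainly, asks for a specific answer format, and gives the model explicit permission to say it doesn’t know. Instructions like these tend to reduce guessing but cannot guarantee accuracy.

```python
def build_prompt(question, answer_format="one short sentence"):
    """Wrap a question in instructions that discourage guessing."""
    lines = [
        f"Question: {question.strip()}",
        f"Answer in {answer_format}.",
        "If you are not sure, say \"I don't know\" instead of guessing.",
        "Do not invent names, dates, numbers, or sources.",
    ]
    return "\n".join(lines)


print(build_prompt("In what year did euro banknotes and coins enter circulation?"))
```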

For Developers and Researchers

- Use TruthfulQA and similar benchmarks: Regularly test your models for truthfulness.

- Incorporate GAN-based filters: Especially for image generation, these can catch unrealistic outputs.

- Monitor for bias: Use AI bias detection tools to scan training data and outputs.

- Update training data: Keep your models current with the latest, most accurate information.

- Encourage transparency: Let users know when content is AI-generated and may contain errors.
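Regular testing is most useful when it is automated, so that a new model version cannot ship if it has become less truthful. The sketch below assumes you already compute a benchmark score for each version (for example, accuracy on a truthfulness test set); the function name, version names, scores, and the 0.02 tolerance are all hypothetical.

```python
def check_release(history, new_version, new_score, max_drop=0.02):
    """Block a release if its score falls too far below the best so far.

    `history` maps earlier version names to scores in [0, 1].
    """
    best_version, best_score = max(history.items(), key=lambda item: item[1])
    ok = best_score - new_score <= max_drop
    status = "OK" if ok else "BLOCKED"
    print(f"{new_version}: {new_score:.2f} vs best {best_version} "
          f"({best_score:.2f}) -> {status}")
    return ok


# Hypothetical truthfulness scores for earlier versions.
history = {"v1": 0.58, "v2": 0.62}
check_release(history, "v3", 0.61)   # v3: 0.61 vs best v2 (0.62) -> OK
check_release(history, "v3b", 0.55)  # v3b: 0.55 vs best v2 (0.62) -> BLOCKED
```

Comparing against the best previous score, rather than only the last one, keeps a series of small drops from quietly adding up.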

Industry Examples

- Healthcare: AI chatbots are used to answer medical questions, but always with a disclaimer to consult a real doctor.

- Education: Teachers use AI to create quizzes, but review the questions for accuracy.

- Customer Service: Companies use AI to handle common questions but escalate complex issues to humans.
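The pattern behind these examples (answer routine questions, add a disclaimer on sensitive ones, hand hard cases to people) can be written as a simple routing rule. The sketch below is hypothetical: the topic lists, the function name, and the source of the `confidence` score are assumptions, and because AI confidence scores are often poorly calibrated, a real threshold would need tuning on real conversations.

```python
# Hypothetical policy lists; a real deployment would define its own.
ESCALATE_TOPICS = {"refund_dispute", "account_security"}
DISCLAIMER_TOPICS = {"medical"}


def route(topic, confidence, threshold=0.8):
    """Decide how to handle one incoming question.

    `confidence` is assumed to come from some upstream scorer in [0, 1].
    """
    if topic in ESCALATE_TOPICS or confidence < threshold:
        return "escalate_to_human"
    if topic in DISCLAIMER_TOPICS:
        return "answer_with_disclaimer"
    return "answer"


for topic, conf in [("order_status", 0.95), ("order_status", 0.4),
                    ("medical", 0.9), ("refund_dispute", 0.99)]:
    print(topic, conf, "->", route(topic, conf))
```

Checking the escalation list first means a sensitive case goes to a person even when the AI sounds sure of itself.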

Real-World Impact: Why Hallucination Mitigation Matters

Safety and Trust

When AI makes mistakes, it can erode trust. In sensitive areas like healthcare or finance, a single error can have big consequences. That’s why hallucination mitigation is a growing field.

Business Reputation

Companies that use AI want to avoid embarrassing or harmful mistakes. By testing their models on benchmarks like TruthfulQA and running bias detection, they can protect their reputation and serve customers better.

Social Responsibility

AI developers have a duty to minimize harm. This means constantly improving models, being transparent about limitations, and listening to user feedback.

The Future of AI Hallucinations

Researchers are working hard to make AI more reliable. Techniques such as reinforcement learning from human feedback (RLHF), in which people compare model answers and the model is tuned toward the ones they prefer, are helping models avoid some mistakes. But as AI gets more powerful, the challenge of hallucination mitigation will continue.
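The “human feedback” part of RLHF usually starts with people comparing two model answers and picking the better one. A reward model is then trained to agree with those choices, and the language model is tuned to score well on it. A common training objective for the reward model is a pairwise loss, sketched below with made-up reward values; this covers only the reward-model step, not the full reinforcement-learning loop.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise reward-model loss for one comparison: -log(sigmoid(margin)).

    Small when the reward model already scores the human-preferred answer
    higher; large when it prefers the answer the human rejected.
    """
    margin = reward_chosen - reward_rejected
    # log(1 + exp(-margin)), written two ways to avoid overflow in math.exp
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical rewards for two answers to one question, where a human
# preferred the accurate answer over an invented one.
print(round(preference_loss(2.0, -1.0), 3))  # 0.049 (model agrees with the human)
print(round(preference_loss(-1.0, 2.0), 3))  # 3.049 (model disagrees: larger loss)
```

Training pushes the loss down, which means widening the reward gap in favor of the answers people preferred; if labelers consistently prefer accurate answers, the model is nudged toward accuracy.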

You can expect better tools, more transparent systems, and ongoing efforts to keep AI honest. But your role—asking good questions, checking facts, and staying curious—remains essential.

Your Role in a World of AI Hallucinations

AI is here to stay, and it’s changing how you live and work. But it’s not magic. It makes mistakes, sometimes in surprising ways. By understanding AI hallucinations, you can use these tools more safely and effectively.

Have you ever caught an AI making something up? How did you handle it? Share your experiences, doubts, or tips in the comments below. Your insights help everyone learn and grow.

The best way to use AI is with a mix of curiosity and caution. Keep asking questions, keep checking facts, and don’t be afraid to challenge what you see. Together, we can make the most of this incredible technology—without falling for its hallucinations.

FAQ

What are AI hallucinations?

AI hallucinations are false or misleading outputs generated by AI systems, such as chatbots or image generators. They occur when the AI produces information that isn’t accurate or real.

Why do generative AI errors happen?

Generative AI errors happen due to limitations in training data, overgeneralized patterns, ambiguous prompts, model design, or biases in the data. The AI doesn’t truly “understand” facts; it predicts likely answers based on patterns.

How can I detect AI hallucinations?

You can detect hallucinations by fact-checking information and watching for red flags like overly specific or contradictory details. If you build AI systems, benchmarks like TruthfulQA and GAN-based filters can help you test models and screen their output.

What is TruthfulQA?

TruthfulQA is a benchmark used to test how truthful AI models are. It asks questions that many people answer incorrectly because of common misconceptions, to see whether the AI repeats those falsehoods or sticks to the facts.

How can I reduce AI hallucinations in my work?

Ask clear questions, cross-check important answers, use detection tools, and stay aware of the limits of AI. Developers should regularly test models, update training data, and use bias detection tools.

Discover more from The News Prism
